SEO Crawling Meaning: How It Impacts Your Site

When it comes to SEO (Search Engine Optimization), one term that you’re likely to come across often is crawling. Crawling is an essential process that search engines use to discover and understand your website’s content.

If search engines can’t crawl your site properly, it will be difficult for them to index your pages and show them in search results. For anyone who wants their website to rank well on search engines like Google, understanding crawling is crucial.

In this blog post, we’ll explain what SEO crawling means, how it works, and why it matters for your website’s visibility and performance. We’ll also cover best practices to ensure your website is easily crawlable, which is essential for improving your search engine rankings.

In this article:

- What is SEO Crawling?

- Why is Crawling Important for SEO?

- Factors That Affect SEO Crawling

- How to Optimize Your Website for Crawling

What is SEO Crawling?

Crawling is the process by which search engines, like Google or Bing, send out automated bots, also known as crawlers or spiders, to explore the internet. These bots scan websites, follow links, and gather information about web pages.

The data collected by the crawler is then stored and analyzed to help search engines understand the content and structure of websites. This information is used to create a searchable index of the web.

In simple terms, crawling is how search engines find your website’s pages. Once a page has been crawled, it can be added to the search engine’s index and shown in results when users search for relevant topics.

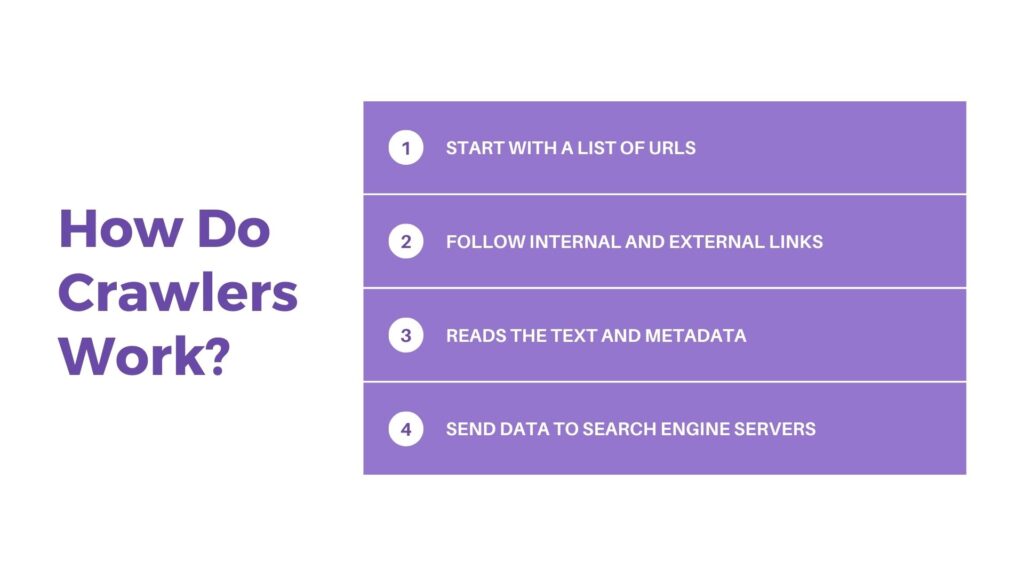

How Do Crawlers Work?

Search engines use crawlers to “read” the content on your site. Here’s a breakdown of how crawlers operate:

- Starting Point: Crawlers start with a list of URLs, often from previously indexed pages or sitemaps that webmasters submit.

- Following Links: As crawlers visit a page, they follow the internal and external links on that page to discover new pages to crawl. Backlinks from other websites lead crawlers to your pages in the same way. This is why having a well-organized link structure is crucial for crawling.

- Reading the Content: The crawler reads the text, looks at the metadata (such as page titles and descriptions), and analyzes other elements like images, videos, and keywords.

- Storing Data: The crawler sends the data it collects back to the search engine’s servers, where it is stored, analyzed, and eventually used to determine your site’s relevance and ranking in search results.
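
The steps above can be sketched as a small breadth-first crawl. This is only an illustration under stated assumptions: the `PAGES` dictionary is a hypothetical in-memory stand-in for the web, and the `crawl` function and example.com URLs are made up for this example. Real search engine crawlers fetch pages over HTTP and are far more sophisticated.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
# A real crawler would fetch each page over HTTP and parse its links instead.
PAGES = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/products"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/"],
    "https://example.com/products": ["https://example.com/products/widget"],
    "https://example.com/blog/post-1": [],
    "https://example.com/products/widget": ["https://example.com/"],
    "https://example.com/orphan": [],  # nothing links here, so it is never found
}


def crawl(seed_urls, get_links):
    """Breadth-first crawl; returns URLs in the order they were visited."""
    frontier = deque(seed_urls)  # URLs waiting to be visited (the starting point)
    seen = set(seed_urls)        # URLs already queued, so none is visited twice
    order = []
    while frontier:
        url = frontier.popleft()
        order.append(url)        # stand-in for "read the content and store the data"
        for link in get_links(url):  # follow the links found on the page
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order


discovered = crawl(["https://example.com/"], lambda url: PAGES.get(url, []))
```

In this sketch, `https://example.com/orphan` never shows up in `discovered` because no crawled page links to it, which is the situation a sitemap or better internal linking is meant to address.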

It’s important to note that not every page on the internet gets crawled immediately or regularly. The frequency and depth of crawling depend on several factors, including how often your site updates and how authoritative your site is in the eyes of search engines.

Why is Crawling Important for SEO?

Crawling plays a critical role in search engine optimization because it directly affects how search engines index your site and, ultimately, how well your pages rank. If your website isn’t crawled properly, search engines can’t index your pages, meaning they won’t show up in search results.

Here are some key reasons why crawling is important for your SEO strategy:

1. Discovery of New Content

Every time you add new pages or update existing content on your website, crawlers need to find it. If search engines can’t crawl your new pages, they won’t be able to index them, which means your audience won’t see those pages in search results.

For example, if you launch a new product page or post a new blog article, you want it to show up in search results. Ensuring your site is crawlable helps search engines discover and index your content quickly.

2. Improving Search Rankings

Search engines crawl your website to determine the relevance and quality of your content. Crawling allows search engines to analyze your website’s structure, metadata, and keywords, which helps them decide how to rank your pages in search results.

If a search engine can easily crawl your site and understand its content, your pages have a better chance of ranking higher for relevant search queries.

3. Indexing Your Website

Crawling is the first step in the indexing process. After a search engine crawls your site, it decides whether or not to include your pages in its index. If a page isn’t indexed, it won’t appear in search results. This means that even if you have high-quality content, users won’t be able to find it unless search engines successfully crawl and index your pages.

4. Detecting Technical SEO Issues

Crawlers can help uncover technical SEO issues that may be preventing search engines from indexing your site properly. Common issues include broken links, slow page speed, and duplicate content. Identifying and fixing these issues ensures that crawlers can access and index all the important pages on your site.

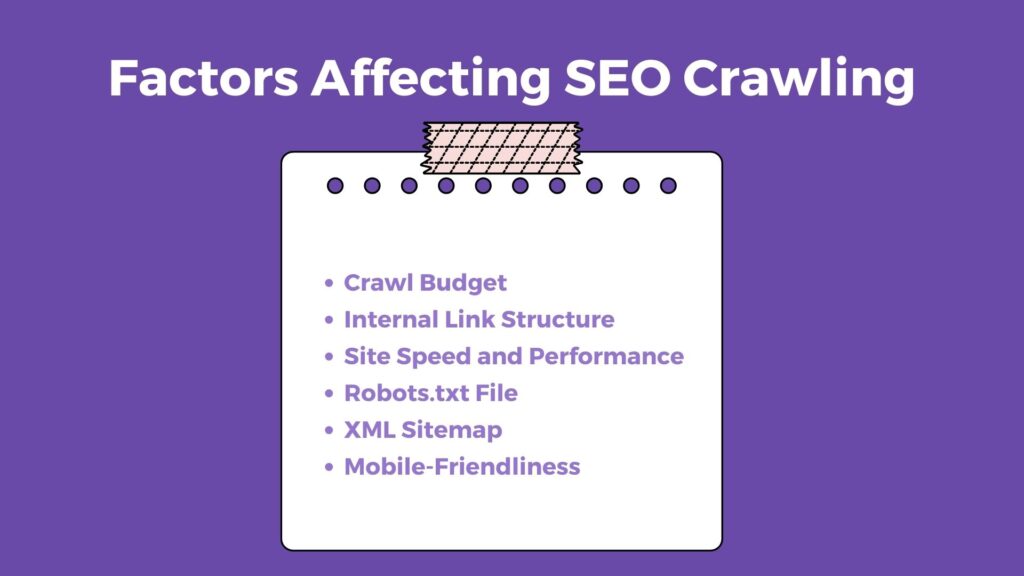

Factors That Affect SEO Crawling

Not all websites are crawled in the same way. Several factors influence how often and how deeply search engines crawl your website. Understanding these factors will help you optimize your site for crawling and ensure your content is indexed properly.

1. Crawl Budget

The crawl budget refers to the number of pages a search engine crawler is willing to crawl on your website during a specific period. The crawl budget is influenced by factors like the size of your website, the number of internal links, and the speed of your website.

If you have a large site, not all of your pages may be crawled immediately, so it’s important to prioritize pages with the most important content. You can help search engines use their crawl budget efficiently by optimizing your site’s structure and ensuring fast load times.

2. Internal Link Structure

A clear and logical internal link structure helps crawlers navigate your site. Crawlers follow links to discover new pages, so if you have a well-organized linking system, crawlers can find and index your content more effectively. On the other hand, a site with poor internal linking may leave important pages undiscovered and unindexed.

Make sure that every page on your site is linked from at least one other page, since crawlers cannot reach a page that nothing links to. Use clear, descriptive anchor text for your internal links, and consider using breadcrumb navigation to improve site structure. Internal links may also pass some authority (often called link juice) to the pages they point to.

3. Site Speed and Performance

The speed at which your site loads can impact how much of your content search engines can crawl. If your website loads slowly, crawlers may not crawl all your pages before moving on to other sites. Fast-loading websites provide a better user experience and make it easier for crawlers to explore your site efficiently.

Tools like Google PageSpeed Insights can help you test your site’s speed and identify areas for improvement, such as compressing images, reducing server response times, and enabling browser caching.

4. Robots.txt File

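The robots.txt file is a text file that tells search engine crawlers which parts of your site they may crawl and which to avoid. It is commonly used to keep crawlers out of areas such as admin pages or staging sites. Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it, so use a noindex directive or password protection for pages that must stay out of the index.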

However, incorrectly configured robots.txt files can accidentally block important pages from being crawled, which can hurt your rankings. Always double-check your robots.txt file to make sure you’re not preventing crawlers from accessing key content.
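
One way to double-check a robots.txt file is to test your key URLs against its rules. The sketch below uses Python's standard `urllib.robotparser` module. The rules in `ROBOTS_TXT`, the `is_crawlable` helper, and the example.com URLs are assumptions made for illustration, and individual search engines may interpret some rules differently than this parser does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
"""


def is_crawlable(robots_txt, url, user_agent="*"):
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network call
    return parser.can_fetch(user_agent, url)


# Hypothetical list of pages to verify against the rules.
KEY_PAGES = [
    "https://example.com/",
    "https://example.com/blog/seo-crawling",
    "https://example.com/admin/login",
]

blocked = [url for url in KEY_PAGES if not is_crawlable(ROBOTS_TXT, url)]
```

Here only the admin URL ends up in `blocked`. If a page you want in search results appeared in that list, the robots.txt rules would need adjusting.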

5. XML Sitemap

An XML sitemap is a file that lists all the important pages on your website, making it easier for search engines to crawl and index them. Submitting a sitemap to search engines like Google can help ensure that crawlers find and index all your essential pages, even if your internal link structure is not perfect.

Sitemaps are particularly useful for large websites or websites with content that isn’t well-linked. You can generate an XML sitemap with your CMS or a sitemap generator, then submit it through tools like Google Search Console.
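
For a sense of what a sitemap file contains, here is a minimal sketch that builds one with Python's standard `xml.etree.ElementTree` module, following the public sitemaps.org format. The `build_sitemap` function, the page URLs, and the dates are hypothetical. Most sites would rely on their CMS or a plugin rather than hand-rolled code like this.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs; dates are YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # the page's full URL
        ET.SubElement(url, "lastmod").text = lastmod  # when it last changed
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")


# Hypothetical pages, for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/seo-crawling", "2024-02-01"),
])
```

The resulting string can be saved as `sitemap.xml` at the root of the site and then submitted to search engines.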

6. Mobile-Friendliness

As more users access the web through mobile devices, search engines have started prioritizing mobile-friendly websites in their search rankings. Crawlers assess the mobile version of your site to determine how well it performs on smartphones and tablets.

Ensuring that your site is responsive—meaning it adjusts to fit different screen sizes—can help improve its crawlability. Google uses mobile-first indexing, which means it primarily crawls the mobile version of your site, so it’s essential to optimize for mobile users.

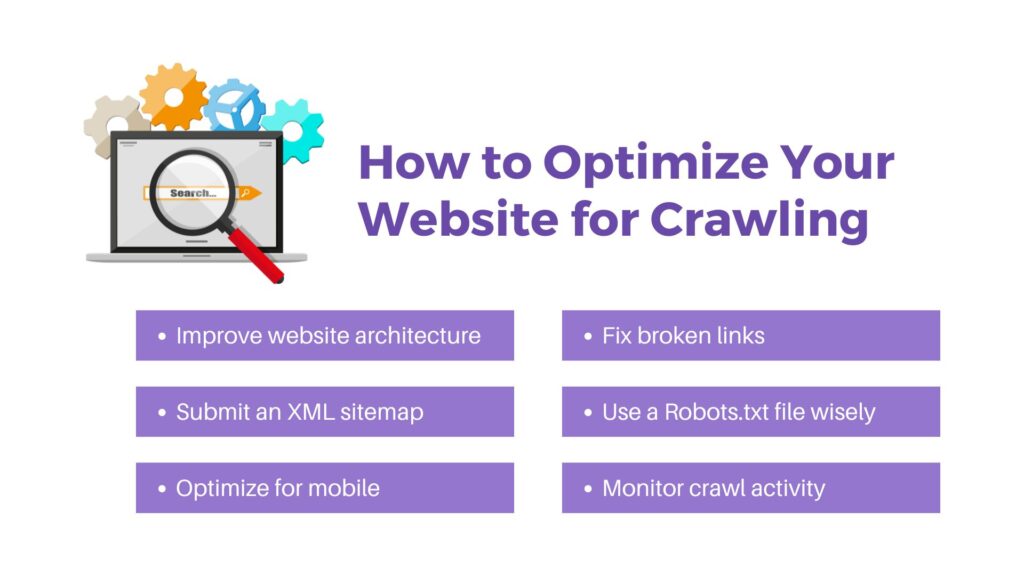

How to Optimize Your Website for Crawling

Now that you understand the importance of crawling for SEO, here are some tips for optimizing your website to make sure it’s easy for search engines to crawl and index.

1. Improve Website Architecture

Create a clear, logical structure for your website that makes it easy for crawlers (and users) to navigate. Each page on your site should be no more than a few clicks away from the homepage. Use internal linking to connect related pages and help search engines understand the relationships between your content.
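
To check how many clicks each page is from the homepage, you can measure click depth over your internal links. The following is a rough sketch: the `SITE_LINKS` map, the `click_depths` function, and the threshold of three clicks are all assumptions chosen for illustration, not an official limit.

```python
from collections import deque

# Hypothetical internal link map: each page lists the pages it links to.
SITE_LINKS = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": ["/products/widgets"],
    "/blog/post-1": [],
    "/products/widgets": ["/products/widgets/blue"],
    "/products/widgets/blue": ["/products/widgets/blue/specs"],
    "/products/widgets/blue/specs": [],
}


def click_depths(links, home="/"):
    """Return the minimum number of clicks needed to reach each page from home."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


MAX_CLICKS = 3  # assumed threshold for "a few clicks"
depths = click_depths(SITE_LINKS)
too_deep = sorted(page for page, depth in depths.items() if depth > MAX_CLICKS)
```

Pages listed in `too_deep` are candidates for extra internal links from higher-level pages, such as a category page or the homepage.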

2. Submit an XML Sitemap

Submit an XML sitemap to Google and other search engines through tools like Google Search Console. This helps crawlers find all your important pages, even if they aren’t linked internally.

3. Optimize for Mobile

Make sure your website is fully mobile-responsive to ensure that it performs well on all devices. Google uses mobile-first indexing, so having a site that works well on mobile is essential for both user experience and crawlability.

4. Fix Broken Links

Broken links can prevent crawlers from accessing parts of your site and disrupt the user experience. Regularly check for broken links and fix them to ensure that all your content is accessible to both users and search engines.
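
A simple broken-link check extracts the links from a page and compares them against the pages that actually exist. The sketch below uses Python's standard `html.parser` module. The `LinkExtractor` class, the `find_broken_links` function, and the sample HTML and paths are hypothetical, and a real checker would typically request each URL and inspect the HTTP status code rather than rely on a known list of paths.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def find_broken_links(html, existing_paths):
    """Return links in html that do not point to a known existing path."""
    extractor = LinkExtractor()
    extractor.feed(html)
    return [link for link in extractor.links if link not in existing_paths]


# Hypothetical site data, for illustration only.
EXISTING_PATHS = {"/", "/blog", "/contact"}
PAGE_HTML = """
<a href="/blog">Blog</a>
<a href="/contcat">Contact</a>
<a href="/pricing">Pricing</a>
"""

broken = find_broken_links(PAGE_HTML, EXISTING_PATHS)
```

In this example `broken` contains the mistyped `/contcat` link and the `/pricing` link, which points to a page that does not exist.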

5. Use a Robots.txt File Wisely

Make sure your robots.txt file is correctly configured to allow crawlers to access the pages you want indexed. Avoid blocking important pages or sections of your website by mistake.

6. Monitor Crawl Activity

Use tools like Google Search Console to monitor how search engines crawl your site. This can help you identify any issues, such as blocked pages or crawl errors, and fix them before they negatively impact your SEO.

Get Your Site Discovered

Crawling is a fundamental part of how search engines discover, understand, and rank your website. If your site isn’t easily crawlable, search engines won’t be able to index your content, and it won’t appear in search results.

By ensuring your site has a well-structured architecture, fast load times, and proper use of tools like sitemaps and robots.txt files, you can improve your site’s crawlability and boost its visibility in search results.

To maximize your site’s visibility and authority, complement your SEO efforts with high-quality backlinks through Link Genius. Backlinks play a crucial role in boosting your rankings, and with Link Genius, you can easily discover and secure powerful link-building opportunities to enhance your website’s authority.